By Frank Miedema, PhD @MiedemaF

The Health Council of the Netherlands recently concluded that the dominant use of academic metrics had, from the perspective of the public, shaped the research agenda of the eight University Medical Centers in an undesirable way. Fields of research that are badly needed because of the disease, economic and social burden appeared to be neglected in favor of fields that are held in higher esteem from the academic perspective. Preventive medicine, public health research, chronic disease management and rehabilitation medicine have lost out to the genetics of autism and molecular cancer research, among others. This is not related to excellence but is due to goal displacement induced by a skewed incentive and reward system applied to allocate credit. How did we get here and what can we do about it?

‘Criteria for Choice’

Until a few years ago it was still common enough, at least among academic scientists, to hear the viewpoint expressed that: ‘Science is essentially unpredictable and hence unplannable. The best thing to do therefore would be to give the scientist as much money as he wants to do what research he wants. Some of it would be bound to pay off, intellectually or economically or with luck both’.

One might think that this quote comes from recent debates about Open Science or about directing publicly funded research towards Grand Societal Challenges. That would be quite plausible, but these lines are from ‘Criteria for Choice’ by Hilary Rose and Steven Rose, published in 1969. The authors point out that free research has traditionally been the perquisite of only a few, but for most academic scientists has been, and at best is, an inspirational myth. For that minority, however, it is the rhetoric used to protect their vested interests in the debate on how to decide what and whose research should be funded. That debate is still heated, because of the current hyper-competition for funding at a time when society realises that science must be more responsible.

Metrics shape science

To deal with increasing competition in a rapidly growing system, there was a need for broadly usable quantitative criteria. In the past decades, evaluation, especially in the natural and biomedical sciences, has become dominated by the use of metrics.

Although they are poor proxies for quality, the number of papers, citations, Journal Impact Factors and the Hirsch index (h-index) are used. This is increasingly being criticized. Indeed, these metrics favor basic research over fields of research that are closer to practice or that historically have other publication and citation cultures. Because of strategic behavior, the socially and clinically most relevant research has suffered, while many young researchers went into already crowded fields that do well in the metrics.

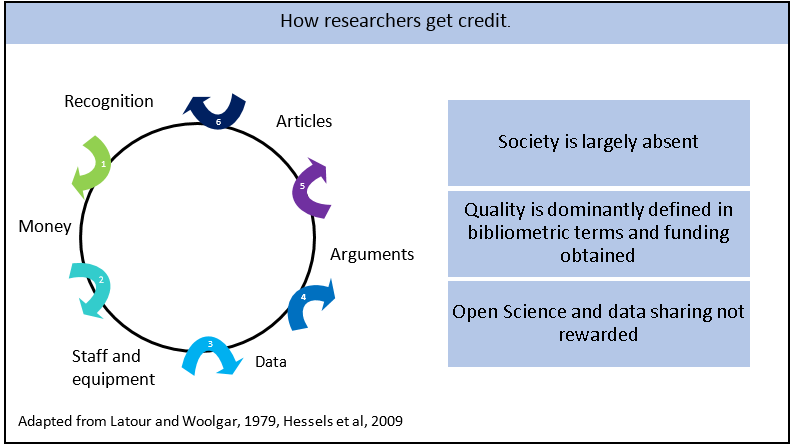

The cycle of credibility.

In the cycle of credibility, all actors (deans, department heads, group leaders and PhD students, but also funders and journal editors) are each optimizing their own interests, which are not in sync with the collective aims of science. Every year millions of papers are published in a still growing number of journals. However, since investments of time and effort in quality and quality control, such as peer review, are not rewarded, most of these papers are of poor quality. It is now widely acknowledged that we have a serious reproducibility crisis, at least in the biomedical and social sciences. Despite their personal ideals and good intentions, researchers in this incentive and reward system find themselves pursuing not the work that benefits public or preventive health or patient care the most, but the work that gives the most academic credit and is better for career advancement.

Criteria for societal and clinical impact

Most researchers, when they enter science, are mainly motivated by the prospect of contributing to a better quality of life. Are quality criteria related to the predicted impact of research on society, whether technical, economic or social, therefore not at least as important, if not much more so? But how should these fundamentally distinct criteria be applied when evaluating science? How could one deal with them in real-life decision making at various levels? The Council, and also the Ministry of Higher Education and Science, called for the development of a National Science Agenda and a shift in evaluation procedures towards societal impact.

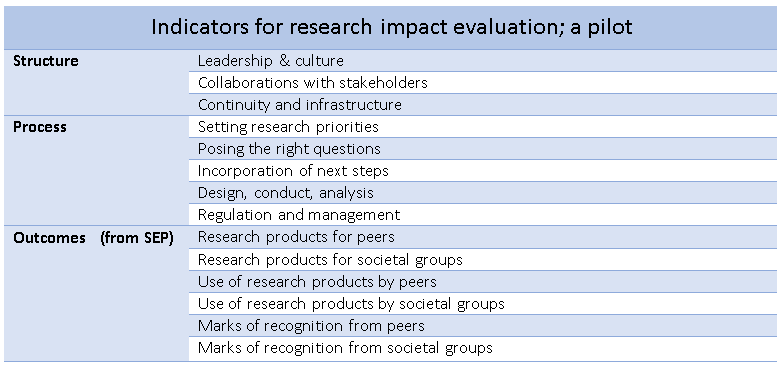

At UMC Utrecht we have run pilots with a set of evaluation criteria that focuses on processes and diverse outcomes, which may include, in compliance with Open Science, shared data sets, biobanks and biomaterials. Evaluation implies ‘reading instead of counting’ to appreciate quality and impact. In addition, we have invited representatives of non-academic societal stakeholders to sit on the expert review committees. Since researchers and staff were largely unfamiliar with these more labour-intensive evaluation practices, this was initially not met with enthusiasm alone. Sarah de Rijcke (CWTS Leiden University) and her team are studying the reception of these interventions by different actors.

It is generally agreed that time needs to be spent on proper and fair quality assessment to evaluate our research in light of our mission to improve public health, cure and clinical care. It all comes down to the question: Who are we answering to? Patient and public needs or internal academic criteria for excellence and career advancement? We must and can have both.

****

Frank Miedema, PhD (ORCID ID: 0000-0002-8063-5799) is Dean and vice chair of the Board at UMC Utrecht, Utrecht University, The Netherlands. Frank Miedema is one of the founders of Science in Transition, a mission to reform the scientific system, and chair of a ‘Mutual Learning Exercise’ about Open Science, instigated by the European Commission, in which issues around changes in research evaluation, incentives and rewards are discussed with representatives from more than ten member states. He recently spoke about new incentives for open science at the opening of the QUEST Centre in Berlin, attended by our Editor-in-chief Emily Sena. As a journal, we value research quality over novelty and support the mission to emphasise research quality and to open up a wider discussion about what science should represent.